Voluntary Poverty

Regression is a choice

Party Like It’s 1899

“The man who does not read has no appreciable advantage over the man who can not,” so says the maxim variously [and apocryphally] attributed to Mark Twain and others. I’m a fan of that formulation because it recognizes human agency in our condition, and it’s also a versatile sentiment that we can apply all across society. Ignorance is, for many people, a choice. And those who choose to be ignorant — those who choose not to learn — have no benefit over those who are incapable of learning. For my money, voluntary ignorance is actually worse than natural ignorance, because there’s something especially tragic about wasted potential. Not to go all Yoda on you, but ignorance — voluntary or otherwise — can lead to other iniquities, most notably poverty. And poverty, much like hate in a Jedi, leads to suffering.

One of the most incredible stories in human advancement in the last half-century is the plummeting global poverty rate. Since 1975, the rate of so-called “extreme poverty” [defined by the World Bank as living on less than $1.90 a day] has dropped from roughly 50% worldwide to less than 10%. This stunning reduction in poverty is obviously the result of increased economic productivity, but one of the drivers of this increased economic productivity has been increased health. Simply put, people are more able to produce wealth for themselves when they’re not stricken with disease, or spending time and resources caring for others who are stricken with disease. Many diseases, e.g., cholera and dysentery, can be prevented by simple hygienic practices like treating drinking water. Other potentially fatal diseases, such as polio, tetanus, measles, and various pneumococcal infections can be prevented by safe, effective, and blessedly readily-available vaccines.

Yet there is a sizable, and seemingly growing, constituency — consisting almost entirely of well-to-do westerners in developed nations — who look at these modern miracles and see the devil.

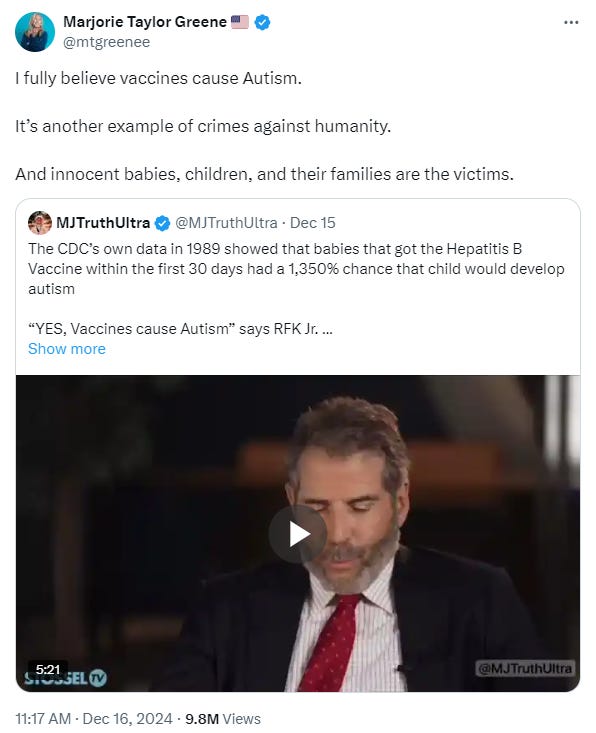

Earlier this week, Marjorie Taylor Greene — an embarrassment to my home state, my alma mater, a sizeable contingent of my relatives [who nevertheless keep voting for her], the Republican party, Congress, politics, and Americans generally — took to Twitter to declare:

She fully believes it, y’see. And I don’t see any reason to doubt her, because she has proven willing to believe any number of evidence-free banana-crackers ideas, including but not limited to: that various mass shootings, e.g., Sandy Hook Elementary School [in which 20 first-graders were murdered], the Parkland shooting in Florida [in which 17 high schoolers were murdered], and the Las Vegas shooting [in which 58 people were murdered] were all staged in order to gin up support for gun control; that Donald Trump was leading a secret investigation into a cabal of Satan-worshipping pedophile elites [commonly known as the QAnon conspiracy]; that the Pentagon was hit by a missile instead of a hijacked plane on 9/11 [another staged event by the government to gin up support for their preferred policy]; that a wealthy Jewish family used space-based lasers to start wildfires in a land development scheme; that the 2020 presidential election was stolen; and that the solar eclipse in 2024 [which was predicted literally centuries in advance] was a “strong sign” sent from God “telling us to repent.”

My instinct is to say that she is aggressively stupid, but I think it’s worse than that. The longer we linger in this political moment, the more I’m coming around to the idea that these people aren’t stupid, per se, they’re just bad people. Believing that vaccines cause autism, or that mass shootings and other terrorism incidents are false flag operations perpetrated by the government, or any number of other provably-false contentions, those are not intellectual failings; those are moral failings. I know people get sensitive about being called stupid for believing verifiably untrue things, but I’m not sure they would prefer being called immoral because of it, either. But it’s certainly something. It’s one thing to be simply unaware of the miraculous nature of modern medicine; it’s something else entirely to invent reasons to hate it.

One of the most unforgivable aspects of Trumpism, to me, has been the glorification of people with genuinely bad, repeatedly discredited ideas — few more egregious than the nomination of another infamous anti-vaccine crank, Robert F. Kennedy, Jr., to be Secretary of Health and Human Services. One of the impetuses for Greene’s braying asininity was a newly-circulating video from a town hall that RFK held during his ill-fated presidential campaign last year, in which he said:

“My stance on vaccines […] is, vaccines should be tested like other medicines. They should be safety tested. And unfortunately, vaccines are not safety tested. […] Of the 72 vaccine doses now mandated, essentially mandated, they’re “recommended” but they’re really mandated, for American children, none of them—not one—has ever been subject to a pre-licensing, placebo-controlled trial.”

Nonsense. Every single claim in that sentence is false. For one thing, the “72 vaccine doses” claim is rhetorical sleight of hand to make it seem more scary. It’s actually more like 10 separate vaccines for kids under 15 months old, 12-13 depending on how you feel about annual flu and COVID shots. For kids over 18 months old, it’s more like six different vaccines until the age of 18. Even if you count individual doses of vaccines, i.e., every time a syringe enters the skin, you can get to 30. If you count two flu vaccines and a COVID vaccine every year, I guess you can almost get to 70. But what they’re doing to get to “72 vaccine doses” is breaking out each component of a vaccine separately, even though that’s not how vaccines are administered. E.g., for the DTaP [diphtheria, tetanus, and acellular pertussis] and MMR [measles, mumps, and rubella] vaccines, they’re counting each disease preventative as its own “vaccine dose,” meaning the standard five doses of DTaP and two doses of MMR, i.e., seven shots, add up to 21 “doses” of vaccine. And the argument is “Well, back in the 1960s, kids only got four vaccine doses, isn’t it weird that kids today get so many?” No! Because back in the 1960s, only four vaccines existed, Debra. My own children have been vaccinated against chickenpox, which wasn’t approved by the FDA until 1995, years after I’d already had chickenpox. So yes, kids today get more vaccines than they used to because more vaccines have been developed.

More importantly, the idea that none of the recommended childhood vaccines have ever been subject to a pre-licensing placebo-controlled trial is also nonsense. That is standard procedure for vaccine development, and every single recommended childhood vaccine went through that process. RFK claims otherwise because he apparently has beef with the definition of “placebo.” He claims that only saline and water should count as placebos, but there are any number of substances that medical researchers consider placebos for medical purposes. Also, medical researchers will, for ethical reasons, often use already-approved vaccines as a control [i.e., placebo] to compare against new vaccines. For example, when testing a pneumococcal vaccine, it’s considered unethical to test a new version of a vaccine against a true placebo when we know that a safe pneumococcal vaccine already exists. Which is to say, it’s unethical to expose people to meningitis and/or sepsis during a vaccine trial when we don’t need to. That means, however, that in some cases, new versions of previously-approved vaccines do not technically undergo true placebo testing, even though the safety concerns have already been addressed. Nevertheless, the claim that none of the currently recommended vaccines went through robust safety trials is simply untrue. But because RFK has an unrecognized idea of what “placebo” means, his argument is basically “that doesn’t count!” Except…yes it does.

All of RFK’s disingenuous hand-wringing about vaccine safety is actually a thinly-veiled effort to conceal his actual belief, shared by Marjorie Taylor Greene et al., that increased vaccination regimens over the last few decades have led to an increase in autism diagnoses. The main basis for this belief, aside from the general human inability to differentiate between correlation and causality, was a study published in 1998 that purported to show a link between the MMR vaccine and autism. In 2004, 10 of the 12 researchers involved in the study retracted their findings. In 2010, the entire paper was retracted, and shortly thereafter it was discovered that the study itself was actually fraudulent. Nevertheless, many people apparently have difficulty accepting that an increase in one phenomenon, i.e., childhood vaccination, and an increase in another phenomenon, i.e., autism diagnoses, can be unrelated. And yet.

It’s worth noting that we’ve only been tracking autism cases since the year 2000. Before that, kids with social development issues were mostly just considered…well, weird, or whatever. I personally had several classmates growing up, and I’m sure you did as well, that I assume would have been diagnosed as autistic had such a thing existed at the time. The rate of autism diagnoses in 2000 was roughly 1 in 150 children; today it’s on the order of 1 in 36 — which sounds like a huge increase! But in the intervening quarter-century, there has been a widening of diagnostic criteria as well as increased screening. There are also genetic factors, such as older parents — particularly older fathers, which has itself trended up in recent years — that can contribute to instances of autism. Which is to say, it’s not that the ratio of people who qualify as autistic has radically increased in the early 21st century — though there has been a non-zero increase — it’s mostly that 1. we look for it when we didn’t before, and 2. we’ve gotten better at identifying it. And as difficult as it is to definitively prove a negative, we’ve gotten about as close as possible to confirming that there is no link between vaccination and autism.

As with many things in our political culture, everything can seem like a scandal if you don’t know anything about it — or, worse, know just enough to be egregiously wrong.

All that said, I have a difficult time maintaining my outrage toward those who voluntarily inflict this poverty on their children. Because that’s what such behavior amounts to. Similar to how the man who doesn’t read has no advantage over the man who can’t, the child whose parents refuse to get them vaccinated has no advantage over the child who has no access to vaccines. So if parents want to force their children to medically live in the 19th century out of a misinformed fear of autism, I’m running out of reasons for why we shouldn’t let ’em.

Tom Wolfe wrote an essay called “The Great Relearning,” in which he recounts a trip to San Francisco in 1968 and what he called a “curious footnote to the psychedelic movement”:

At the Haight Ashbury Free Clinic there were doctors who were treating diseases no living doctor had ever encountered before, diseases that had disappeared so long ago they had never even picked up Latin names, diseases such as the mange, the grunge, the itch, the twitch, the thrush, the scroff, the rot. And how was it that they had now returned? It had to do with the fact that thousands of young men and women had migrated to San Francisco to live communally in what I think history will record as one of the most extraordinary religious experiments of all time.

The hippies, as they became known, sought nothing less than to sweep aside all codes and restraints of the past and start out from zero. […] Among the codes and restraints that people in the communes swept aside—quite purposely—were those that said you shouldn’t use other people’s toothbrushes or sleep on other people’s mattresses without changing the sheets or, as was more likely, without using any sheets at all or that you and five other people shouldn’t drink from the same bottle of Shasta or take tokes from the same cigarette. And now, in 1968, they were relearning . . . the laws of hygiene … by getting the mange, the grunge, the itch, the twitch, the thrush, the scroff, the rot.

“Experience is an expensive school,” to paraphrase Ben Franklin, “but mankind will learn at no other.” People occasionally need to relearn lessons that were once uncontroversial, so maybe anti-vaccine types need to experience the effects of their beliefs. If that means a bunch of kids get eminently preventable diseases like measles, or tetanus, or whooping cough, or diphtheria, or whatever, and some non-zero number of those children then die, all because their parents think RFK “makes some good points,” well, that’s tragic, but that’s the drawback of free will. Better luck in the genetic lottery next time, I guess.

This is all of a piece with my general irritation with people who want to burn down or otherwise dismantle “the system” because they have no appreciation for how well they’ve made out in “the system.” If they want to take a pickax to the foundation of human development, there are only so many ways to convince them otherwise. At a certain point, let them touch the hot stove.

Re-examining My Priors

One of my long-standing pet peeves is Daylight Saving[s] Time. For one thing, the term doesn’t make any sense as implemented. Daylight Saving[s] Time is the term we use to describe the period between March and November when sunrises are earliest and sunsets are latest. It doesn’t seem to me that any daylight is being “saved” during that time; it seems more like we’re spending every bit of daylight we can. Meanwhile, the period between November and March when we turn clocks back, thereby making sunset earlier — which to my mind “saves” the daylight for use in the summer — is referred to as Standard Time. It seems more intuitive to me to call the period of shorter days “Daylight Saving[s] Time,” because “savings” indicates some amount of sacrifice to enjoy later, i.e., we set the clocks so that the sun sets earlier in the winter in order to have later sunsets in the summer. And then the period of longer days should be called Standard Time because that’s obviously the more preferable setup, but no one asked me. Also, the Standard Time Act, which first mandated the semiannual clock changes, was signed into law by President Woodrow Wilson, and my general abhorrence of him requires that I also grumble about his hubris in thinking he knew the time better than the sun.

Mostly, though, I just enjoy sunlight, and intentionally changing the clocks so that the sun sets before 5:00 p.m. for two months strikes me as an abomination. I’ve often joked that should a president have the courage to abandon the time change system, I will express my support by getting their campaign slogan tattooed on my face. In early 2017, I proclaimed that if Donald Trump could rid us of Daylight Saving[s] Time, I would get “Make America Great Again” inscribed on my forehead. In March of 2019, he expressed on Twitter his openness to the idea, but as with most of his supposed policy ideas, he quickly abandoned it after apparently learning that it would require more than a tweet. He recently revisited the idea, saying the Republicans will “use [their] best efforts to eliminate Daylight Saving Time,” which he described as “inconvenient and very costly” to our nation.

Now, a deal’s a deal, so in the unlikely event that Congress actually takes action on this, I’m going to either Make My Forehead Great Again or live with being a raging hypocrite. But it did cause me to actually consider — is the elimination of the time change actually what I want? Personally, I prefer when [in my area of the country] the sun sets as late as 8:40 p.m. in the summer, so count me in favor of making Daylight Saving[s] Time permanent. But I consulted an astronomical table and realized that without “falling back” in November, the sun would rise after 8:00 a.m. for three months out of the year, and as late as 8:30 for the month following the winter solstice. Which seems like a steep price to pay to keep sunset after 5:00. But if we went the other way and kept the inaptly-named Standard Time year-round, the sun would rise before 5:00 a.m. for two months during the summer, but never set later than 7:40 p.m. And nuts to that! No one needs sun when the clock starts with a 4 [that joke works better on 24-hour time].

It’s a sign of maturity, I think, to accept that there are precious few easy solutions in life and that most decisions involve tradeoffs between suboptimal choices. So yeah, it sucks that the sun sets before 5:00 for most of the winter. Would I prefer the sun didn’t rise until after 8:00 instead? Not really. It sucks losing an hour of sleep in March, but is it worth the sun coming up at 4:40 in June? Not really. So the best options seem to be to continue gritting through two clock changes a year, or move closer to the equator. Live and learn, I guess. Woodrow Wilson was still a racist asshat, regardless.

Occasional Trivia

Answer from last time:

Category: U.S. Financial History

Clue: This president, who vetoed the legislation to recharter the Second Bank of the United States, would later end up on one of its bill denominations.

Andrew Jackson

Today’s clue:

Category: Supreme Court Decisions

Clue: Expanding press freedom, this decision rejected a charge of libel in 1964 after an Alabama police commissioner sued a northern newspaper.

Dispatches from the Homefront

My wife and I jokingly refer to our daughters as “the tall one” and “the short one,” but we came to the realization the other day that that formulation may not hold much longer. Much like my brother and me, my older daughter is small for her age while the younger one has the appetite of a college kid who just finished a bong session. [From roughly the time I was eight years old, despite a four-year gap between us, I could no longer plausibly call my brother my “little” brother; he was my younger brother. He was on the offensive line in high school; I played horn in the marching band. We grew at different rates, is my point.] Anyway, there’s only a two-year gap between my daughters so it’s not inconceivable that the younger one could overtake the older one at a fairly young age. People already ask if they’re twins at an alarming rate.